We’ve all had that moment when a once-reliable system starts to feel… off. Maybe your phone’s autocorrect goes rogue, or your favorite music app starts serving up baffling recommendations. In the world of Artificial Intelligence, this slow, silent erosion of performance has a name: drift.

Imagine your AI model as a finely tuned engine, trained on mountains of data to perform a specific task—whether it’s predicting customer behavior, detecting spam, or navigating traffic. But the world isn’t static. Preferences shift, adversaries evolve, and environments change. When the data your model encounters begins to diverge from its training distribution, performance starts to slip. That’s drift.

The Many Faces of Drift

Drift isn’t one-size-fits-all. It comes in several flavors:

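- Concept Drift: The relationship between the input data and the target the model is trying to predict changes, so inputs that once meant one thing now mean another.

- Data Drift: The statistical distribution of the incoming data shifts over time, even if the underlying input-to-target relationship holds.

- Upstream Data Drift: External systems or pipelines feeding your model start behaving differently, for example by changing a schema, a unit of measure, or a default value.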
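To make data drift concrete, here is a minimal Python sketch of one common drift statistic, the Population Stability Index (PSI), which compares a feature's training-time sample with a recent production sample. The function name `psi`, the equal-width binning, and the synthetic Gaussian samples are illustrative assumptions, not part of any particular framework. A statistic like this only sees changes in the inputs; concept drift with unchanged inputs has to be caught by monitoring labeled outcomes or model performance.

```python
import math
import random

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample.

    Bins are equal-width over the reference sample's range; values that fall
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so empty bins do not produce log(0).
        return [max(c / len(sample), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Synthetic feature values (hypothetical): training data vs. two production windows.
random.seed(0)
train = [random.gauss(0, 1) for _ in range(5000)]
stable = [random.gauss(0, 1) for _ in range(5000)]
shifted = [random.gauss(1, 1) for _ in range(5000)]  # mean moved by one std dev

print(f"stable:  {psi(train, stable):.3f}")
print(f"shifted: {psi(train, shifted):.3f}")
```

A frequently quoted rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a significant shift, but thresholds are worth tuning per feature.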

Regardless of type, the result is the same: your AI begins to misfire.

From Symptoms to Strategy: Enter Game Theory

Drift tells us what’s happening. But it doesn’t always explain why. That’s where game theory—the study of strategic interactions—enters the picture.

In many real-world applications, AI models aren’t operating in isolation. They’re embedded in dynamic ecosystems, interacting with other agents—humans, machines, or environments—that make strategic decisions of their own. These interactions can cause drift.

Let’s explore a few examples:

The Cat-and-Mouse Game of Fraud Detection – An AI model flags suspicious credit card transactions. But fraudsters adapt, constantly evolving their tactics to evade detection. This adversarial dynamic creates drift. Game theory helps us model this strategic back-and-forth, anticipate attacker behavior, and build more resilient defenses.
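The fraud example can be written down as a tiny zero-sum "inspection game". In the sketch below (an illustrative assumption, not a model of any real fraud system), the defender chooses whether to concentrate scrutiny on online or in-store transactions, the fraudster chooses where to attack, and each cell is a made-up probability that the fraud is caught. Because this game has no pure-strategy saddle point, the equilibrium is mixed, and the standard closed form for 2x2 zero-sum games gives both players' probabilities. The function name `solve_2x2_zero_sum` is hypothetical.

```python
from math import isclose

def solve_2x2_zero_sum(a, b, c, d):
    """Mixed-strategy Nash equilibrium of a 2x2 zero-sum game.

    Payoffs are to the row player (defender):
        [[a, b],
         [c, d]]
    Returns (prob. defender plays row 1, prob. attacker plays column 1, game value).
    """
    maximin = max(min(a, b), min(c, d))
    minimax = min(max(a, c), max(b, d))
    if isclose(maximin, minimax):
        raise ValueError("pure-strategy saddle point; no mixing needed")
    denom = a - b - c + d
    p = (d - c) / denom  # defender mix that makes the attacker indifferent
    q = (d - b) / denom  # attacker mix that makes the defender indifferent
    value = (a * d - b * c) / denom
    return p, q, value

# Hypothetical catch probabilities (illustrative, not measured):
# rows = defender focuses on online / in-store; cols = fraudster attacks online / in-store.
p, q, v = solve_2x2_zero_sum(0.9, 0.3, 0.2, 0.8)
print(f"defender online focus {p:.2f}, attacker online share {q:.2f}, catch rate {v:.2f}")
# defender online focus 0.50, attacker online share 0.42, catch rate 0.55
```

At equilibrium the fraudster is indifferent between channels. If the defender moves away from its equilibrium mix (say, by tuning the model to focus almost entirely on online fraud), one channel becomes strictly better for the attacker, and the resulting shift in attack traffic is exactly the kind of drift a detector would later observe.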

The Strategic User of Recommendations – Streaming platforms use AI to suggest content. But users aren’t passive—they respond strategically to recommendations, explore new genres, or binge-watch trends. As preferences shift, the data drifts. Game theory can model this interaction, helping platforms adapt and personalize more effectively.

The Dance of Autonomous Agents – In a smart city, self-driving cars must navigate traffic while reacting to each other. If traffic patterns change, each vehicle’s decision-making must evolve. This multi-agent system is a classic game-theoretic scenario. Understanding these dynamics helps mitigate drift and improve coordination.
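The traffic example is a congestion game. The sketch below is a deliberately tiny, hypothetical version: cars choose between two routes whose travel time grows linearly with load, and each car in turn switches routes if that strictly shortens its own trip. Congestion games are potential games, so these sequential best responses settle into a pure Nash equilibrium. The function name and the parameters (free-flow time and per-car delay, in arbitrary units) are invented for illustration.

```python
def best_response_dynamics(n_cars, routes, max_rounds=100):
    """Sequential best-response dynamics in a two-route congestion game.

    routes[r] = (free_flow_time, delay_per_car); travel time on route r is
    free_flow_time + delay_per_car * (number of cars on r).
    Returns the number of cars on each route once no car wants to switch.
    """
    choice = [0] * n_cars  # every car starts on route 0
    loads = [n_cars, 0]
    for _ in range(max_rounds):
        moved = False
        for car in range(n_cars):
            cur, alt = choice[car], 1 - choice[car]
            t_cur = routes[cur][0] + routes[cur][1] * loads[cur]
            # If this car switched, the other route would carry one more car.
            t_alt = routes[alt][0] + routes[alt][1] * (loads[alt] + 1)
            if t_alt < t_cur:
                choice[car] = alt
                loads[cur] -= 1
                loads[alt] += 1
                moved = True
        if not moved:  # nobody can improve: pure Nash equilibrium
            break
    return loads

# Hypothetical parameters in arbitrary time units.
normal = [(100, 2), (150, 1)]     # route 0: fast but congests quickly
roadworks = [(100, 3), (150, 1)]  # route 0 now loses more time per car
print(best_response_dynamics(100, normal))     # [50, 50]
print(best_response_dynamics(100, roadworks))  # [37, 63]
```

When roadworks make route 0 slower per car, the equilibrium split moves from 50/50 to 37/63. A model trained on traffic under the old equilibrium would now see a different input distribution, not because any single driver is erratic but because every agent re-optimized.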

From Detection to Understanding

Drift detection is essential—but it’s only the first step. By applying game theory, we can uncover the strategic causes behind drift. This deeper insight allows us to design AI systems that anticipate change, adapt intelligently, and remain robust in dynamic environments.

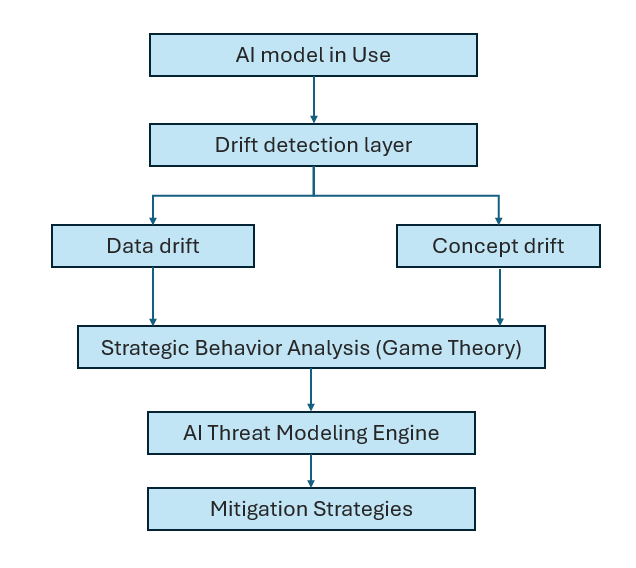

Bridging to Threat Modeling

This perspective aligns closely with my AI Threat Modeling Framework, where I explore how adversarial behavior, strategic adaptation, and environmental shifts impact AI reliability. By integrating drift analysis with game-theoretic modeling, we can proactively defend against threats and build systems that thrive in the wild.

This AI Threat Modeling Framework is built to anticipate, analyze, and mitigate risks in intelligent systems. It’s not just about patching vulnerabilities—it’s about understanding the strategic landscape in which the AI operates. This is where drift and game theory become powerful lenses.

Drift as a Threat Signal

Drift isn’t merely a performance issue—it’s often a symptom of adversarial or strategic pressure. In this framework, drift can be treated as a dynamic threat indicator, pointing to:

- Adversarial adaptation (e.g., fraudsters evolving tactics)

- User behavior shifts (e.g., strategic engagement with recommender systems)

- Environmental volatility (e.g., changing traffic patterns in autonomous systems)

By integrating drift detection into this threat modeling pipeline, we can do more than monitor model health: we can track the evolving behavior of the other agents in the system.
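One way to turn model health into a threat signal is to run a sequential change detector over the model's own error stream. Below is a minimal sketch of the Page-Hinkley test, which flags an upward shift in the mean of a stream. The class and helper names, the `delta` and `threshold` values, and the synthetic error stream are assumptions chosen for the example; a deterministic toy stream keeps it reproducible.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a data stream."""

    def __init__(self, delta=0.05, threshold=10.0):
        self.delta = delta          # magnitude of change tolerated as noise
        self.threshold = threshold  # alarm level (often written lambda)
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0              # cumulative deviation m_t
        self.min_cum = 0.0          # running minimum M_t

    def update(self, x):
        """Add one observation; return True if m_t - M_t exceeds the threshold."""
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum += x - self.mean - self.delta
        self.min_cum = min(self.min_cum, self.cum)
        return self.cum - self.min_cum > self.threshold


def first_alarm(stream, detector):
    """Index of the first observation that triggers the detector, or None."""
    for i, x in enumerate(stream):
        if detector.update(x):
            return i
    return None


# Deterministic toy stream of per-prediction errors (1 = misclassified):
# a 5% error rate for 1,000 predictions, then 25% (hypothetically, after
# fraudsters change tactics).
stream = [1 if i % 20 == 0 else 0 for i in range(1000)]
stream += [1 if i % 4 == 0 else 0 for i in range(500)]

alarm_at = first_alarm(stream, PageHinkley(delta=0.05, threshold=10.0))
print("drift alarm at prediction", alarm_at)
```

An alarm like this says only that something changed. In a threat-modeling workflow it would be the trigger for asking which agent changed its strategy, rather than a cue to retrain blindly.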

Game Theory as a Modeling Tool

Game theory provides the strategic scaffolding to understand why drift occurs. This framework can incorporate game-theoretic models to:

- Simulate adversarial evolution: Predict how attackers might adapt to your defenses.

- Model multi-agent interactions: Understand how autonomous systems co-adapt in shared environments.

- Design incentive-aware systems: Align user behavior with system goals to reduce strategic drift.

This shifts threat modeling from static checklists to dynamic scenario planning, where agents are rational, adaptive, and sometimes adversarial.

Operationalizing the Bridge

Here’s how we could explicitly connect the dots in this framework:

| Component | Role of Drift | Role of Game Theory | Integration Strategy |
|---|---|---|---|
| Threat Identification | Detect performance degradation due to data shifts | Identify strategic agents causing drift | Use drift metrics as triggers for deeper strategic analysis |
| Threat Modeling | Classify drift types (concept, data, upstream) | Model agent strategies and payoffs | Build game-theoretic simulations to forecast threat evolution |
| Mitigation Planning | Adapt models to new data distributions | Design counter-strategies and incentives | Use Nash equilibria or regret minimization to guide model updates |
| Monitoring & Feedback | Continuous drift detection | Strategic behavior tracking | Integrate feedback loops that adjust based on agent responses |
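The table's suggestion to use Nash equilibria or regret minimization can be sketched with regret matching, a well-known regret-minimization algorithm: when both players of a zero-sum game update by regret matching, their time-averaged strategies approach a Nash equilibrium. The example uses a hypothetical 2x2 fraud inspection game (rows: where the defender concentrates scrutiny, columns: where the fraudster attacks, entries: made-up catch probabilities). A 2x2 game can be solved in closed form, but the same loop works for larger action sets. Function names and the iteration count are illustrative assumptions.

```python
def regret_matching_strategy(regrets):
    """Turn cumulative regrets into a mixed strategy (uniform if none is positive)."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total > 0:
        return [r / total for r in positive]
    return [1.0 / len(regrets)] * len(regrets)


def solve_zero_sum(payoff, iterations=20000):
    """Approximate a Nash equilibrium of a zero-sum matrix game by self-play
    regret matching. payoff[i][j] is the row player's (defender's) payoff;
    the column player (attacker) receives -payoff[i][j].
    Returns the time-averaged mixed strategies of both players."""
    rows, cols = len(payoff), len(payoff[0])
    row_regret, col_regret = [0.0] * rows, [0.0] * cols
    row_avg, col_avg = [0.0] * rows, [0.0] * cols
    for _ in range(iterations):
        x = regret_matching_strategy(row_regret)
        y = regret_matching_strategy(col_regret)
        # Expected payoff of each pure action against the opponent's current mix.
        row_util = [sum(payoff[i][j] * y[j] for j in range(cols)) for i in range(rows)]
        col_util = [-sum(payoff[i][j] * x[i] for i in range(rows)) for j in range(cols)]
        row_value = sum(x[i] * row_util[i] for i in range(rows))
        col_value = sum(y[j] * col_util[j] for j in range(cols))
        # Regret = how much better each pure action would have done than the mix.
        for i in range(rows):
            row_regret[i] += row_util[i] - row_value
            row_avg[i] += x[i]
        for j in range(cols):
            col_regret[j] += col_util[j] - col_value
            col_avg[j] += y[j]
    return [s / iterations for s in row_avg], [s / iterations for s in col_avg]


# Hypothetical catch probabilities: rows = defender focus (online, in-store),
# cols = attacker target (online, in-store).
catch = [[0.9, 0.3], [0.2, 0.8]]
defender, attacker = solve_zero_sum(catch)
print([round(s, 2) for s in defender], [round(s, 2) for s in attacker])
```

In a drift-triggered workflow, one could re-solve the game whenever a drift alarm suggests the attacker's payoffs have changed, and use the defender's averaged strategy to decide where to focus monitoring or retraining effort. That workflow is an assumption about how the pieces might fit together, not a prescribed procedure.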

This approach turns the framework into a living system, one that doesn’t just react to threats but anticipates and adapts to them.

By embedding drift and game theory into the AI Threat Modeling Framework, we can do more than diagnose why an AI goes astray: we can build the strategic intelligence to keep it on course.

Next time your AI starts to go astray, don’t just patch the symptoms—think like a strategist. Because in the game of intelligence, adaptation isn’t a bug. It’s the whole game.
