Overview

Have you ever wanted to ask questions about a document and get instant, intelligent answers — like chatting with the PDF itself? That’s exactly what I built today: a chatbot that reads a PDF, understands its content, and answers questions using Azure OpenAI and LangChain.

In this post, I’ll walk you through:

- The goal of the project

- The technologies behind it

- Step-by-step code to build your own

Project Goal

The goal was to create a chatbot that:

- Loads a PDF document

- Breaks it into chunks and embeds them as vectors

- Stores those vectors in a searchable database

- Uses GPT-4 via Azure OpenAI (the example below deploys gpt-4o-mini) to answer questions based on the document

This is a classic example of retrieval-augmented generation (RAG) — combining search with generative AI.

Technologies Used

LangChain

LangChain is a framework for building applications powered by language models. It helps you connect models, prompts, memory, and tools into a single pipeline.

In this project, I used:

- ChatPromptTemplate to format the prompt

- AzureChatOpenAI to connect to GPT-4

- Chroma as the vector store

- PyPDFLoader to read the PDF (you can also use loaders for other formats, such as text files)

Embedding Vectors

Embeddings are how we turn text into numbers — specifically, high-dimensional vectors that capture meaning. Similar texts have similar vectors.

Azure OpenAI provides an embedding model that converts each chunk of the PDF into a vector. These vectors are stored in Chroma and used to retrieve relevant content.
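"Similar vectors" is usually measured with cosine similarity: the cosine of the angle between two vectors, close to 1 when they point the same way and close to 0 when they are unrelated. Below is a minimal pure-Python sketch; the three-dimensional vectors are made-up numbers for illustration only (real text-embedding-ada-002 vectors have 1,536 dimensions).

```python
import math
from typing import List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings" (invented values, not real model output)
cat = [0.9, 0.1, 0.0]
kitten = [0.85, 0.15, 0.05]
invoice = [0.0, 0.2, 0.95]

print(cosine_similarity(cat, kitten))   # close to 1: similar meaning
print(cosine_similarity(cat, invoice))  # close to 0: unrelated
```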

LLM (Large Language Model)

GPT-4 is the language model used to generate answers. It reads the question and the retrieved context, then responds in natural language.

Azure OpenAI gives you secure, scalable access to GPT-4 via cloud APIs.

Step-by-Step Code

1. Install Dependencies

```bash
pip install -U langchain langchain-community langchain-openai openai chromadb pypdf tiktoken
```

2. Import Libraries

```python
# Step 2: Import libraries
from openai import AzureOpenAI

from langchain_community.document_loaders import PyPDFLoader
# from langchain_community.document_loaders import TextLoader  # for plain-text files instead of PDFs
from langchain_community.vectorstores import Chroma
from langchain_core.embeddings import Embeddings
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import AzureChatOpenAI
```

3. Set Up Azure OpenAI Client

```python
# Step 3: Set up Azure OpenAI client
az_endpoint = "https://your-resource-name.openai.azure.com"
a_version = "your-azure-openai-api-version"  # example = 2023-07-01-preview

client = AzureOpenAI(
    api_key="your-azure-api-key",
    api_version=a_version,
    azure_endpoint=az_endpoint,
)

# Deployment names are the names you gave your deployments in Azure;
# they can differ from the underlying model names
chat_deployment = "gpt-4o-mini"
embedding_deployment = "text-embedding-ada-002"
```
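To see why the endpoint, deployment name, and API version all have to be right, here is a minimal sketch that assembles (but does not send) the kind of REST request the SDK makes for you. It follows Azure OpenAI's documented URL pattern `{endpoint}/openai/deployments/{deployment}/{operation}?api-version=...` with the key in an `api-key` header; `build_azure_request` is a hypothetical helper for illustration, not part of the openai package.

```python
import json
from typing import Dict, Tuple
from urllib.parse import urlencode


def build_azure_request(endpoint: str, deployment: str, api_version: str,
                        operation: str, api_key: str,
                        payload: dict) -> Tuple[str, Dict[str, str], str]:
    """Hypothetical helper: build (url, headers, body) for an Azure OpenAI call.

    operation is, for example, "embeddings" or "chat/completions".
    Nothing is sent over the network.
    """
    base = endpoint.rstrip("/")
    # The deployment name goes in the URL path; the API version is a query parameter
    query = urlencode({"api-version": api_version})
    url = f"{base}/openai/deployments/{deployment}/{operation}?{query}"
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    return url, headers, json.dumps(payload)


url, headers, body = build_azure_request(
    endpoint="https://your-resource-name.openai.azure.com",
    deployment="text-embedding-ada-002",
    api_version="2023-07-01-preview",
    operation="embeddings",
    api_key="your-azure-api-key",
    payload={"input": ["Hello world"]},
)
print(url)
```

A typo in the deployment name or API version sends the request to the wrong URL, which is one reason Azure setup errors can be confusing at first.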

4. Create a Custom Embedding Wrapper

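```python
# Step 4: Create a LangChain-compatible embedding wrapper
from typing import List


class AzureEmbeddingFunction(Embeddings):
    """Adapts the Azure OpenAI embeddings endpoint to LangChain's Embeddings interface."""

    def __init__(self, client, model):
        self.client = client  # AzureOpenAI client from Step 3
        self.model = model    # embedding deployment name

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        # One request for all texts; pull each vector out of response.data
        response = self.client.embeddings.create(input=texts, model=self.model)
        return [r.embedding for r in response.data]

    def embed_query(self, text: str) -> List[float]:
        # Embed a single query string and return its vector
        response = self.client.embeddings.create(input=[text], model=self.model)
        return response.data[0].embedding
```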

Why Do You Need a Custom Embedding Wrapper?

LangChain expects embedding models to implement a specific interface with two methods:

- embed_documents(texts: List[str]) → List[List[float]]

- embed_query(text: str) → List[float]

However, Azure OpenAI’s embedding API returns a response object with nested data, not just raw vectors. So:

- The wrapper extracts the actual embeddings from the response

- It formats them correctly for LangChain

- It ensures compatibility with vector stores like Chroma

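Without an adapter like this, the raw Azure client can’t be passed to Chroma, because it doesn’t provide these two methods, and you’d get errors or broken retrieval. (The langchain-openai package also ships a ready-made AzureOpenAIEmbeddings class that does the same job; writing the wrapper yourself makes the mechanics visible.)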
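One practical benefit of the wrapper is that its extraction logic can be tested offline. The sketch below uses a hypothetical stub client that mimics the nested response shape (`response.data[i].embedding`) with fake two-number "embeddings" (text length and space count); `PlainEmbeddingFunction` repeats the wrapper's logic from Step 4 without the LangChain base class so it runs with no dependencies. None of these stub names come from the openai SDK.

```python
from types import SimpleNamespace
from typing import List


class StubEmbeddingsAPI:
    """Hypothetical stand-in for client.embeddings; makes no network calls."""

    def create(self, input: List[str], model: str):
        # Fake vector per text: [number of characters, number of spaces]
        data = [SimpleNamespace(embedding=[float(len(t)), float(t.count(" "))])
                for t in input]
        return SimpleNamespace(data=data, model=model)


class StubClient:
    def __init__(self) -> None:
        self.embeddings = StubEmbeddingsAPI()


class PlainEmbeddingFunction:
    """Same extraction logic as the Step 4 wrapper, minus the LangChain base class."""

    def __init__(self, client, model: str) -> None:
        self.client = client
        self.model = model

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        response = self.client.embeddings.create(input=texts, model=self.model)
        return [item.embedding for item in response.data]

    def embed_query(self, text: str) -> List[float]:
        response = self.client.embeddings.create(input=[text], model=self.model)
        return response.data[0].embedding


emb = PlainEmbeddingFunction(StubClient(), model="text-embedding-ada-002")
print(emb.embed_documents(["hello world", "hi"]))
print(emb.embed_query("a b c"))
```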

5. Load PDF and Create a Fresh Vector Store

```python
# Step 5: Load your PDF and create a fresh vector store
import uuid

# Load the PDF (PyPDFLoader returns one Document per page)
pdf_path = "your_document.pdf"
loader = PyPDFLoader(pdf_path)
documents = loader.load()

# Create the embedding function from Step 4
embedding_function = AzureEmbeddingFunction(client, model=embedding_deployment)

# Create a fresh, in-memory vector store. A random collection name means a
# re-run in the same session won't add to a collection left over from an earlier run.
db = Chroma.from_documents(
    documents,
    embedding=embedding_function,
    collection_name=f"temp_collection_{uuid.uuid4().hex}",
    persist_directory=None,  # no persistence: data lives only in memory
)

retriever = db.as_retriever()
```

6. Set Up Prompt and Chat Model

```python
# Step 6: Set up prompt and chat model
prompt = ChatPromptTemplate.from_messages([
    ("system", "Use the following context to answer the question. If the answer isn't in the context, say you don't know."),
    ("user", "Context:\n{context}\n\nQuestion: {question}"),
])

llm = AzureChatOpenAI(
    api_key="your-azure-api-key",
    azure_endpoint=az_endpoint,       # the resource endpoint from Step 3
    deployment_name=chat_deployment,  # example = gpt-4o-mini
    api_version="your-api-version",   # example = 2025-01-01-preview
)


def format_docs(docs):
    # Join the retrieved chunks' text so the prompt gets plain text,
    # not a list of Document objects
    return "\n\n".join(doc.page_content for doc in docs)


# Build the chain: retrieve chunks -> fill the prompt -> call the model
chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | llm
)
```

7. Ask a Question

```python
# Step 7: Ask a question
response = chain.invoke("What is the summary of this document?")
print(response.content)
```

You can try other questions in the code above, such as:

- How many sub-headings are there in the document?

- How many pages are there in the document?

- What is the summary of the last page of the document?

- etc.

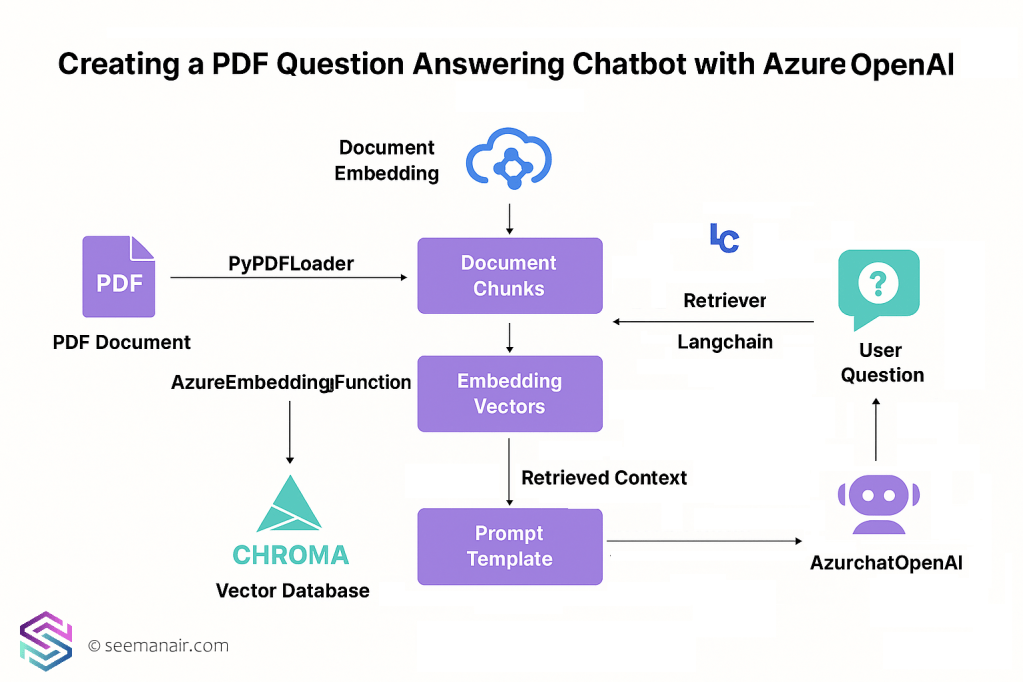

Architecture & Flow

1. Load the PDF

You start by loading a PDF using PyPDFLoader. The loader returns one document per page, so each page becomes a chunk that can be embedded and searched individually.

Think of this like slicing a book into digestible pages.
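Pages can be long, so RAG pipelines often split them further. In LangChain this is usually done with RecursiveCharacterTextSplitter from the langchain_text_splitters package, which prefers paragraph and sentence boundaries. The sketch below is a simpler stand-in, not that class: a hypothetical fixed-window splitter with overlap, so a sentence that straddles a boundary appears in both neighbouring chunks. The sizes are arbitrary.

```python
from typing import List


def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once this window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


page_text = "abcdefghij" * 5  # 50 characters standing in for one PDF page
chunks = split_text(page_text, chunk_size=20, overlap=5)
print([len(c) for c in chunks])
```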

2. Create Embeddings

Each chunk is converted into a numerical vector using Azure OpenAI’s embedding model. These vectors capture the meaning of the text in a way that allows for semantic search.

It’s like turning each chunk into a fingerprint of its meaning.

3. Store Embeddings in Chroma

The vectors are stored in a vector database called Chroma. This lets you search for chunks that are most relevant to a user’s question.

Chroma is your searchable memory bank.

4. User Asks a Question

Now the user types a question — for example, “What is the main topic of the document?”

This is where the magic begins.

5. Retriever Finds Relevant Chunks

LangChain’s retriever takes the user’s question and searches Chroma for the most relevant chunks. It does this by embedding the question and comparing it to the stored vectors.

It’s like asking: “Which parts of the document are most similar to this question?”
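Conceptually, the retriever ranks every stored chunk by similarity to the question’s vector and returns the top k. Below is a minimal brute-force sketch with a hypothetical `InMemoryVectorStore` class and made-up vectors. Real stores such as Chroma use approximate nearest-neighbour indexes (HNSW) and their default distance metric may not be cosine; the default of k=4 here mirrors LangChain’s similarity_search default.

```python
import math
from typing import List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    # dot(a, b) / (|a| * |b|)
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class InMemoryVectorStore:
    """Hypothetical toy store: (text, vector) pairs plus brute-force search."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, List[float]]] = []

    def add(self, text: str, vector: List[float]) -> None:
        self._items.append((text, vector))

    def search(self, query_vector: List[float], k: int = 4) -> List[str]:
        # Score every stored chunk against the query, best first
        scored = [(cosine_similarity(query_vector, vec), text) for text, vec in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


store = InMemoryVectorStore()
store.add("Refunds are issued within 30 days.", [0.9, 0.1, 0.0])
store.add("Our office is in Seattle.", [0.0, 0.9, 0.1])
store.add("Returns require a receipt.", [0.8, 0.2, 0.1])

# Made-up vector standing in for the embedding of "How do I get a refund?"
query = [0.95, 0.05, 0.0]
print(store.search(query, k=2))
```

Because only the top k chunks reach the model, whole-document questions (such as counting pages or headings) may get incomplete answers.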

6. Prompt Template Frames the Answer

The retrieved chunks are inserted into a prompt template. This template tells the language model: “Here’s some context. Now answer the question based on this.”

It’s like giving GPT-4 a cheat sheet before it answers.
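Here is a plain-Python sketch of what filling the Step 6 template amounts to: the retrieved chunk texts are joined into one string and substituted into {context}, and the question goes into {question}. `build_messages` and `format_docs` are illustrative names; the role/content dictionaries mirror the chat completions message format, but this is not ChatPromptTemplate’s actual implementation.

```python
from typing import Dict, List

SYSTEM = ("Use the following context to answer the question. "
          "If the answer isn't in the context, say you don't know.")
USER_TEMPLATE = "Context:\n{context}\n\nQuestion: {question}"


def format_docs(chunks: List[str]) -> str:
    # A blank line between chunks helps the model tell them apart
    return "\n\n".join(chunks)


def build_messages(chunks: List[str], question: str) -> List[Dict[str, str]]:
    user = USER_TEMPLATE.format(context=format_docs(chunks), question=question)
    return [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": user},
    ]


messages = build_messages(
    ["Refunds are issued within 30 days.", "Returns require a receipt."],
    "How long do refunds take?",
)
for m in messages:
    print(m["role"], "->", m["content"])
```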

7. Azure OpenAI Generates the Answer

The prompt is sent to GPT-4 via Azure OpenAI. The model reads the context and the question, then generates a natural-language answer.

The chatbot then responds with an answer grounded in the retrieved text of your PDF.

What I Learned

- Azure OpenAI requires careful setup, especially with deployment_name and api_version

- LangChain is powerful but expects specific interfaces (like embed_documents)

- Prompt formatting matters! Without the {context} placeholder, the LLM never sees the retrieved text, so it has nothing to base its answer on

- Chroma can reuse or persist old data; starting from a fresh in-memory collection avoids stale results

Next Steps

I’m planning to:

- Show citations: Return the source chunk with each answer

- Handle multiple PDFs: Let users choose which document to query

- Add a web UI: Use Streamlit or Gradio to make it interactive

- Add memory: Let the chatbot remember past questions in a session

- Visualize embeddings: Plot similarity scores or cluster maps

Final Thoughts

This project was a great way to explore retrieval-augmented generation using Azure’s cloud models. If you’re working with enterprise documents or want to build smart assistants, this setup is a solid foundation.

Let me know if you try it — I’d love to hear what you build!
