Overview

I architect systems that evolve with purpose, designed to perform, adapt, and resonate with human intent, while delivering measurable value to clients and end users. This portfolio is a mosaic of strategic experiments and academic explorations across AI/ML, IoT, Blockchain, Cloud integration, and design. Each project is shaped by real-world use cases and built with an emphasis on translating complexity into clarity, aligning technology with both human-centered goals and business outcomes.

With over two decades in software engineering, I’ve worked across digital transformation, distributed computing, collaborative problem-solving, and program delivery. Along the way, I’ve developed a growing interest in data structures, statistics, and their role in shaping practical AI/ML systems. My current focus is on building systems that adapt, align with human needs, and remain open to iteration.

I value environments that encourage cross-disciplinary thinking, and I approach learning as a continuous process—unlearning and relearning as needed.

This ePortfolio offers a brief look into recent personal experiments and technology explorations. It’s not a full record and does not include prior roles or organizational titles. To respect company confidentiality and intellectual property, production work delivered for past employers is intentionally excluded. What’s here reflects the kinds of problems I’m currently engaged with and how I approach building thoughtful, functional systems.

Scroll through for snapshots of ongoing work. Some projects are live on GitHub, and more are being added soon. You can also skip to credentials to view some of my latest learning goals and achievements.

Sentiment Analysis for Courier Service Optimization

(Sep’25)

As part of a strategic initiative to enhance service quality at ExpressWay Logistics, I designed and implemented a sentiment analysis system leveraging Azure OpenAI. The goal was to extract actionable insights from user-generated reviews across digital platforms to address key operational challenges—such as delivery delays, package integrity, and responsiveness to diverse customer demands. Using a curated dataset (courier-service_reviews.csv) containing review text and sentiment labels, I conducted exploratory data analysis (EDA), followed by model development and performance benchmarking across multiple machine learning and deep learning algorithms. Azure OpenAI was used to apply prompt engineering techniques for sentiment classification, enabling scalable and context-aware analysis of customer feedback. The final solution accurately identified positive and negative sentiments, empowering the company to refine its logistics strategy and improve customer satisfaction. This project demonstrated the impact of AI-driven feedback analysis in driving continuous service enhancement.

AI Threat Modeling Framework

(In progress)

(Independent exploration project)

This project focuses on designing a comprehensive AI threat modeling framework to identify, assess, and mitigate risks associated with deploying AI systems. By integrating structured threat analysis methodologies with AI-specific risk vectors – such as model manipulation, data poisoning, and adversarial attacks – the framework aims to support secure and responsible AI development. The solution is being built to assist teams in proactively managing vulnerabilities across the AI lifecycle, from design to deployment.

Industrial Safety

(Aug’25)

This initiative focused on developing a machine learning and deep learning–based chatbot designed to assist safety professionals in identifying potential risks based on incident descriptions. Leveraging a comprehensive dataset sourced from one of the largest industrial organizations in Brazil—and globally—the project addressed a critical need: understanding the underlying causes of workplace injuries and fatalities in high-risk environments. Through extensive exploratory data analysis (EDA) and model benchmarking, the team evaluated multiple algorithms to determine the most effective approach for accurately classifying and highlighting safety hazards. The resulting system empowers organizations to proactively mitigate risks, enhance safety protocols, and ultimately protect their workforce.

Stock Market News Sentiment Analysis and Summarization

(Jul’25)

Natural Language Processing: This project is focused on developing an advanced AI-powered sentiment analysis platform designed to enhance financial forecasting and investment strategy.

By systematically analyzing stock market data and automatically processing news articles to assess market sentiment, the system will generate weekly summaries that provide a consolidated view of market dynamics. These insights will support more accurate stock price predictions and enable financial analysts to make data-driven, strategic investment decisions—ultimately improving client outcomes and portfolio performance.

Dual-Channel Resonance Engine (DCRE)

(Independent exploration project)

The Dual-Channel Resonance Engine is a speculative AI architecture designed to harmonize structured cognition with affective resonance. Inspired by dual oscillation physics and bespoke intelligence, DCRE introduces two entangled channels—Structural Cognition and Affective Resonance—that co-regulate input and output through dynamic feedback loops.

Rather than compute answers, DCRE tunes intelligence. It uses protocols like intentive proximity mapping, entropy gates, and empathic realignment to align AI responses with human context, emotion, and intent. This architecture moves beyond retrieval, embracing fluid intelligence that listens, adapts, and reflects.

Use cases span context-aware search, narrative-sensitive agents, and creative collaboration, with evaluation metrics focused on resonance coherence, narrative continuity, and reflective fluency. DCRE is not just a system—it’s a philosophical stance: intelligence as resonance, not reduction.

A Symbolic Overview

Skills & Tools Used: BeSpokeAI, EthicalAI, Data Structures, Evaluation Metrics Design, Prompt Engineering, Intent Mapping & Feedback loops, Multimodal Alignment, Narrative-Aware AI modelling

This visual metaphor represents the DCRE as a layered system that harmonizes analytical cognition and emotional resonance. Two braided channels—one crystalline, one fluid—symbolize logic and empathy working in tandem. A central tuning element reflects the engine’s bidirectional protocol for aligning intention and interpretation, while magnetic fields illustrate empathic realignment across dynamic contexts. Fractal motifs evoke adaptive emergence, signaling the system’s sensitivity to nuance and its ability to evolve. At the core sits a reflective portal: DCRE’s philosophical anchor that prioritizes coherence over computation. Nested spirals suggest triadic expansion and ethical scalability—framing DCRE as an intentional intelligence framework built for complexity, clarity, and systemic impact.

Face Recognition

(Jun’25)

This project focuses on the development of a face recognition system, leveraging techniques from computer vision, a key domain within artificial intelligence. The system comprises two core components: a face detection module that identifies and localizes facial regions within an image using image processing and deep learning techniques, and a face identification model that matches detected faces against a pre-existing database to determine individual identities. The objective is to create a robust, end-to-end pipeline capable of real-time face recognition, suitable for applications in security, authentication, and user personalization.

Used Cars Price Prediction

(Apr’25)

Skills & Tools Covered:

EDA, Data pre-processing, model performance improvement, Hyperparameter tuning.

Developed a predictive model using deep learning and neural networks to estimate the price of used cars based on key factors such as vehicle age, mileage, brand, condition, and market trends. Applied data preprocessing techniques to clean and structure historical car sales data, ensuring accuracy in model training. Leveraged advanced machine learning algorithms, including convolutional layers and optimization techniques, to enhance predictive performance. The model enables users to make informed buying and selling decisions by providing precise price estimations, helping dealers, buyers, and financial institutions optimize their strategies in the used car market.

New Wheels – a case study

(Mar’25)

Analyzed declining sales trends for New-Wheels by examining customer feedback, ratings, and purchase patterns to identify key drivers behind reduced customer acquisition. Addressed the challenge of unstructured data by leveraging MySQL to organize and query critical business information, transforming raw flat-file data into actionable insights. Conducted an in-depth analysis to answer pressing business questions, highlighting correlations between customer sentiment, product performance, and sales impact. Generated visualizations to effectively communicate findings in the Quarterly Business Report, providing leadership with data-driven recommendations to enhance customer satisfaction, improve brand perception, and implement strategic measures for sustainable business growth.

Skills & Tools Covered:

Fetching and filtering techniques to query the data; aggregating the data using aggregate and window functions; getting quicker insights using subqueries and CASE WHEN expressions; joining multiple tables using inner, left, and right joins; creating mathematical calculations using formulas in SQL queries; creating visualizations.

Credit Card Users Churn Prediction

(Feb’25)

Skills & Tools Covered:

EDA, data preprocessing, model performance improvement & final model selection, Bagging & random forest, Boosting, Model Tuning.

Developed a predictive model to analyze customer behavior and identify key factors influencing churn in credit card services. Conducted a comprehensive data analysis to uncover patterns related to spending habits, transaction frequency, credit utilization, and customer engagement. Leveraging machine learning techniques, the model accurately forecasts potential churn, enabling proactive retention strategies. By pinpointing the primary reasons behind customer attrition—such as service dissatisfaction, competitive offerings, or financial constraints—the project provides actionable insights to enhance user experience, optimize retention efforts, and improve long-term profitability for credit card providers.

Trade & Ahead

(Feb’25)

Developed a data-driven portfolio optimization strategy for Trade & Ahead’s clients by applying advanced cluster analysis techniques to stock price movements and financial indicators. Identified distinct stock groups with similar characteristics, enabling a structured approach to diversification. By categorizing assets based on volatility, performance trends, and risk profiles, the project enhances investment decision-making and portfolio construction. The insights generated allow Trade & Ahead to offer clients well-balanced portfolios that maximize returns while mitigating potential losses, fostering more stable long-term growth.

Easy Visa

(Jan’25)

Skills & Tools Covered:

EDA, data preprocessing, Customer profiling, Bagging Classifier, boosting classifier, Gradient boosting, XGBoost, Stacking classifier.

Developed a predictive analytics solution to streamline the visa approval process by analyzing key applicant data. Applied ensemble learning techniques to build a robust classification model that identifies influential factors affecting visa outcomes, such as financial status, travel history, and documentation accuracy. The model enhances decision-making by recommending suitable applicant profiles for approval or denial, enabling a more efficient and transparent evaluation process. Insights from this analysis can help optimize visa policies, minimize processing delays, and improve overall approval accuracy.

Supervised Learning project

(Dec’24)

Developed a data-driven approach to identify key factors influencing booking cancellations for INN Hotels. Conducted an in-depth exploratory analysis to uncover trends related to customer behavior, seasonal variations, and external influences on cancellations. Built a predictive model leveraging supervised learning techniques to forecast cancellation likelihood in advance, enabling proactive decision-making. The insights from this analysis were used to formulate optimized policies for cancellations and refunds, improving revenue retention and enhancing customer satisfaction.

E-news Express Project

(Oct’24)

Leveraged statistical analysis, A/B testing, and data visualization to assess the effectiveness of a new landing page in driving subscriber growth for E-News Express. Key metrics such as conversion rates and time spent on the page were examined, alongside an analysis of language preference impact on user conversion.

Skills & Tools Covered:

Hypothesis Testing, A/B testing, Data visualization, Statistical inference
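As an illustration of the kind of test used to compare conversion rates between an old and a new landing page, here is a minimal sketch of a one-sided two-proportion z-test in plain Python. The function name and the visitor and conversion counts are hypothetical and are not the project's data; the original analysis may have used a different test or library.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """One-sided test of H1: conversion rate of B is greater than that of A.

    Returns (z, p_value) using the pooled standard error.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Upper-tail probability of the standard normal distribution
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 50 visitors per page, 21 vs. 33 conversions
z, p = two_proportion_z_test(conv_a=21, n_a=50, conv_b=33, n_b=50)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With a significance level of 0.05, a p-value below that threshold would suggest the new page converts better for these illustrative counts.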

Food Hub

(Sep’24)

Conducted exploratory data analysis to uncover trends in restaurant and cuisine demand, enabling a food aggregator company to enhance customer experience and optimize business strategies with actionable insights.

Skills & Tools Covered:

Python, Numpy, Pandas, Seaborn, Univariate analysis, Bivariate analysis, EDA, Business recommendations

↑ Post Graduate Program in Artificial Intelligence & Machine Learning

Data Analysis (EDA), Model Building, Training & Evaluation

(Mar’23)

Skills & Tools Covered:

Regression & Classification Algorithms. For a given dataset, Data visualization/Exploration, Data Preprocessing, Model Building, Performance evaluation. EDA, train model using NB and logistic regression (sklearn). Accuracy comparison. GDA, SGDA, Mini-batch. Feature reduction techniques, & train model on reduced features using NB and LR. Generative & Discriminative Logistic Regression. Decision Boundaries, Overfitting/Underfitting. Performance Evaluation. Decision Trees – Entropy and Gini Index. Lagrange Multiplier. SVM, Ensemble method, Kernels, Bagging, Random Forest, Boosting, AdaBoost, Confusion Matrix. Binning, Supervised discretization, proximity functions, PCA, Performance using RMSE, R2, Visualization. Clustering, K-Means.

Post Graduate Certificate in Artificial Intelligence & Machine Learning

ePortfolio (instructure.com) – from BITS Pilani

Agri-Tech

(Nov’22)

Skills & Tools Covered:

Production-level Cloud architecture, Decision making for compute and data scaling, IoT Core ECS Lambda and managed databases.

Industrial/Capstone project on IoT

A farming company needed an innovative approach to farm water management. I developed an automatic sprinkler system driven by soil and air data from embedded sensors. Simulated soil temperature and moisture sensors continuously feed data to the AWS Cloud for processing. The system also fetches air temperature and humidity readings for the farm location from a weather API, and monitors the incoming soil and air readings to control the water sprinklers.

Blockchain Development – Ethereum

(Oct’22)

This project implements the well-known game Rock Paper Scissors (RPS) in an online context. RPS is usually played in person, so actions can be synchronized and disputes can be settled by discussion or by a third party. Synchronization is not easily possible online, and using blockchain effectively can remove the need for a third party for dispute resolution. The same techniques underlie DeFi and many other domains where blockchain is used.

Skills & Tools Covered:

Implement a trustless system, Remix platform, develop contracts using Solidity, Proof without knowledge.
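One common way to play a simultaneous-move game without synchronization or a trusted third party is a commit-reveal scheme. The sketch below shows the idea off-chain in Python. It assumes a commit-reveal design, which the description above does not confirm, and it is not the Solidity contract from the project. The function names `commit`, `verify`, and `winner` are hypothetical.

```python
import hashlib
import hmac
import secrets

MOVES = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def commit(move, salt):
    """Hide a move behind a hash; the random salt prevents brute-force guessing."""
    if move not in MOVES:
        raise ValueError(f"unknown move: {move!r}")
    return hashlib.sha256(salt + move.encode()).hexdigest()


def verify(commitment, move, salt):
    """Check that a revealed (move, salt) pair matches the earlier commitment."""
    return move in MOVES and hmac.compare_digest(commitment, commit(move, salt))


def winner(move_a, move_b):
    """Return 'a', 'b', or 'draw' for two revealed moves."""
    if move_a == move_b:
        return "draw"
    return "a" if BEATS[move_a] == move_b else "b"


# Phase 1: both players publish only their commitments.
salt_a, salt_b = secrets.token_bytes(16), secrets.token_bytes(16)
commit_a = commit("rock", salt_a)
commit_b = commit("scissors", salt_b)

# Phase 2: both reveal; anyone can verify the reveals and compute the result.
assert verify(commit_a, "rock", salt_a) and verify(commit_b, "scissors", salt_b)
print(winner("rock", "scissors"))
```

Because neither player can change a move after committing, the order in which the reveals arrive no longer matters.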

Simple Blockchain

(Jul’22)

This project focuses on key generation, digital signing, and transaction and block validation. It implements basic blockchain semantics and does not reproduce the multiple sender/receiver complexity of Bitcoin transactions. It stores a running balance per account, like Ethereum, instead of a UTXO-based structure like Bitcoin.

Skills & Tools Covered:

Implementing cryptographic functionalities, transaction creation and its validation in the network, transaction processing. Chain validation and account validation.
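The account-based validation described above can be sketched as follows. This is a simplified illustration under stated assumptions: key generation and digital signatures are left out because the description does not name the cryptographic library used, and the block layout and helper names (`make_block`, `apply_transaction`, `validate_chain`) are hypothetical.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64


def block_hash(block):
    """Hash the block contents (everything except the stored hash itself)."""
    payload = {k: block[k] for k in ("index", "prev_hash", "transactions")}
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()


def make_block(index, prev_hash, transactions):
    block = {"index": index, "prev_hash": prev_hash, "transactions": transactions}
    block["hash"] = block_hash(block)
    return block


def apply_transaction(balances, tx):
    """Update running balances in place; reject overspending or non-positive amounts."""
    sender, receiver, amount = tx["sender"], tx["receiver"], tx["amount"]
    if amount <= 0 or balances.get(sender, 0) < amount:
        return False
    balances[sender] -= amount
    balances[receiver] = balances.get(receiver, 0) + amount
    return True


def validate_chain(chain, genesis_balances):
    """Check hash links, block hashes, and every transaction against running balances."""
    balances = dict(genesis_balances)
    prev_hash = GENESIS_PREV_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(block):
            return False
        if not all(apply_transaction(balances, tx) for tx in block["transactions"]):
            return False
        prev_hash = block["hash"]
    return True


b0 = make_block(0, GENESIS_PREV_HASH, [{"sender": "alice", "receiver": "bob", "amount": 30}])
b1 = make_block(1, b0["hash"], [{"sender": "bob", "receiver": "carol", "amount": 10}])
print(validate_chain([b0, b1], {"alice": 50}))
```

Keeping one balance per account means a transaction is valid whenever the sender's current balance covers the amount, with no need to track unspent outputs.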

Cloud DevOps – Infrastructure as Code

(Jun’22)

Step-by-step automation of anomaly detection using Kinesis, SNS, EC2, S3, DynamoDB, and a Lambda handler. In each step, some of the services are provisioned through CloudFormation, which in turn automates the corresponding tasks. In the next step, a few more services, such as CodeDeploy, are provisioned to extend the automation. In the last task, the Lambda handler is provisioned using CloudFormation.

Skills & Tools Covered:

Automating the setup of anomaly detection, Kinesis, DynamoDB, SNS using CloudFormation templates, provisioning an S3 bucket using CloudFormation, deploying the contents of S3 using CodeDeploy into the EC2 instance, provisioning the Lambda handler using CloudFormation, making the entire provisioned setup for anomaly detection work.

Cloud Managed Services and Docker Containers

(May’22)

Built a system that streams stock pricing information for various stocks at different times and then notifies the stakeholders when the values cross specific points of interest (POIs). Used the Yahoo Finance APIs to query the running price of stocks and general information such as 52-week high/low values, and mimicked a live feed by streaming historical data for an older time period. The aim is to automate the service setup used in the anomaly detection project using CloudFormation.

Skills & Tools Covered:

AWS EC2, Kinesis, Lambda handler, DynamoDB, SNS. Running a Python script on an AWS EC2 instance to pull data, using the AWS boto3 API and Kinesis client to push data from the EC2 instance to a Kinesis stream. Configuring AWS SNS to publish notifications via email. Writing an AWS Lambda function to identify the POIs and push the notifications through SNS. Using the same Lambda function to push the POI data to a DynamoDB database.
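The POI check at the heart of the Lambda function can be illustrated with a small, AWS-free sketch. The exact POI rule used in the project is not stated above, so this example assumes a POI is a fixed price level and that an event fires whenever two consecutive prices straddle it. The function name, level names, and sample prices are hypothetical.

```python
def detect_crossings(ticks, levels):
    """Find level crossings in a price stream.

    ticks:  list of (timestamp, price) in arrival order
    levels: dict mapping a level name to a price level
    Returns a list of (timestamp, level_name, direction) events.
    """
    events = []
    for (_, prev_price), (ts, price) in zip(ticks, ticks[1:]):
        for name, level in levels.items():
            if prev_price < level <= price:
                events.append((ts, name, "up"))
            elif prev_price > level >= price:
                events.append((ts, name, "down"))
    return events


# Hypothetical stream and levels (for example, derived from 52-week high/low values)
ticks = [("09:30", 98.0), ("09:31", 101.0), ("09:32", 99.5), ("09:33", 89.0)]
levels = {"high": 100.0, "low": 90.0}
for event in detect_crossings(ticks, levels):
    print(event)
```

In a deployed setup, each event would be the payload handed to the notification and storage steps rather than printed.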

IoT Cloud Processing & Analytics

(Apr’22)

The project uses AWS services such as IoT Core and DynamoDB along with Python. It focuses on data aggregation and anomaly detection based on rules created by the user. The idea is to implement everything on a local machine using boto3 and AWSIoTSDK.

Skills & Tools Covered:

boto3 & AWSPythonIoTSDK: Python simulation code using the SDK to simulate the data. IoT Core: thing creation, viewing data on MQTT, setting up a rule to push data from MQTT to the DB. DynamoDB: creation of tables to store raw data, aggregate data, and anomaly data. Create the tables through the boto3 client or using the console. Writing Python code to perform the above-mentioned operations, such as creating the DB, pulling raw data, and performing aggregation as well as anomaly detection. Put the data back into DynamoDB using the boto3 client.
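The aggregation and rule-based anomaly detection steps can be sketched without any AWS calls. The example below assumes raw readings are dictionaries with `device_id`, `timestamp` (ISO format), and `value` fields, aggregates them per device per minute, and flags aggregates that violate a user-defined range rule. The field names, the rule format, and the sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_minute(readings):
    """Group raw readings per (device, minute) and compute min, max, and average."""
    buckets = defaultdict(list)
    for reading in readings:
        minute = reading["timestamp"][:16]  # 'YYYY-MM-DDTHH:MM'
        buckets[(reading["device_id"], minute)].append(reading["value"])
    return [
        {"device_id": device, "minute": minute,
         "min": min(values), "max": max(values), "avg": mean(values)}
        for (device, minute), values in sorted(buckets.items())
    ]


def find_anomalies(aggregates, rule):
    """Return aggregates whose chosen field falls outside the rule's [min, max] range."""
    field, low, high = rule["field"], rule["min"], rule["max"]
    return [agg for agg in aggregates if not low <= agg[field] <= high]


# Hypothetical raw data and user rule
readings = [
    {"device_id": "temp-1", "timestamp": "2022-04-01T10:00:05", "value": 20},
    {"device_id": "temp-1", "timestamp": "2022-04-01T10:00:35", "value": 22},
    {"device_id": "temp-1", "timestamp": "2022-04-01T10:01:10", "value": 40},
]
aggregates = aggregate_by_minute(readings)
anomalies = find_anomalies(aggregates, {"field": "avg", "min": 0, "max": 30})
print(anomalies)
```

In the project's setting, the raw readings would be read from the raw-data table and the two result lists written back to the aggregate and anomaly tables.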

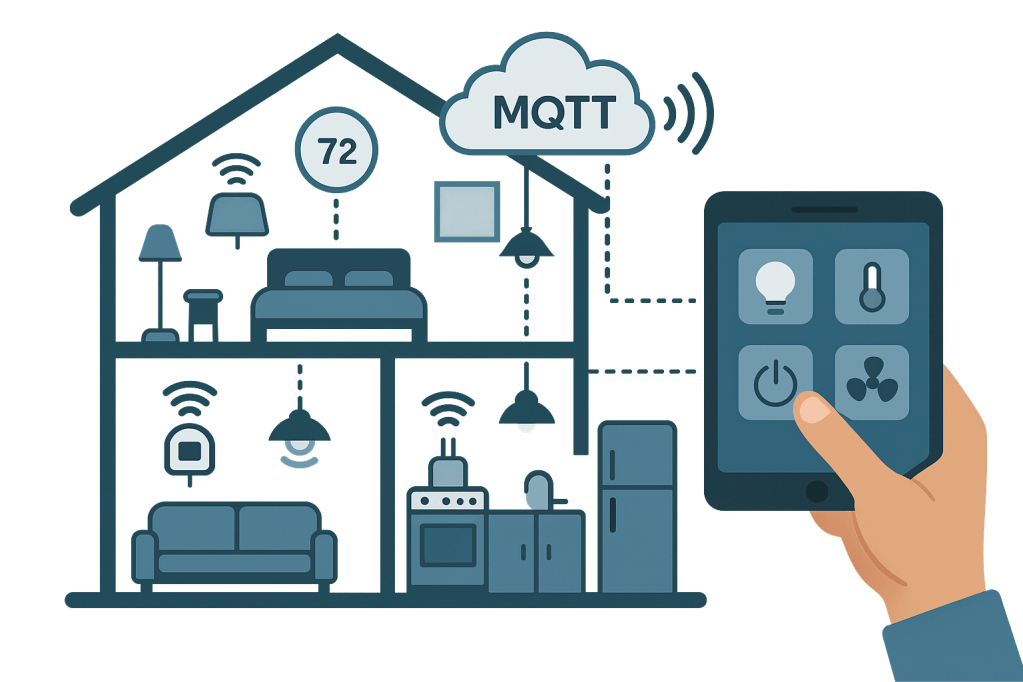

IoT Devices & Networking

(Feb’22)

This project mimics a simple smart home setup using MQTT as a mode of communication. The idea is to enable/register the devices in different rooms and then provide a control mechanism for the user to perform different operations on those devices.

Skills & Tools Covered:

Paho MQTT: use the MQTT client in a real-time scenario and write wrapper functions over what is already available. Create an efficient topic structure to handle the messages, since MQTT is a pub-sub model. Use Python to perform data handling on the received messages. Server as an interface to pass on the communication from the user to the device.
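A topic structure for this kind of smart home setup could look like the sketch below. The layout `home/<room>/<device_type>/<device_id>/<channel>` is an assumption for illustration and is not the project's actual scheme. The helper names are hypothetical, and the matcher is a plain-Python rendering of MQTT's `+` (single level) and `#` (remaining levels) wildcards that assumes the pattern itself is well formed.

```python
TOPIC_FIELDS = ("root", "room", "device_type", "device_id", "channel")


def build_topic(room, device_type, device_id, channel, root="home"):
    """Build a topic such as 'home/kitchen/light/l1/set'."""
    parts = (root, room, device_type, device_id, channel)
    for part in parts:
        if not part or any(ch in part for ch in "/+#"):
            raise ValueError(f"invalid topic level: {part!r}")
    return "/".join(parts)


def parse_topic(topic):
    """Split a concrete topic back into its named fields."""
    parts = topic.split("/")
    if len(parts) != len(TOPIC_FIELDS):
        raise ValueError(f"unexpected topic shape: {topic!r}")
    return dict(zip(TOPIC_FIELDS, parts))


def topic_matches(pattern, topic):
    """Return True if a subscription pattern with + or # wildcards matches a topic."""
    pattern_parts = pattern.split("/")
    topic_parts = topic.split("/")
    for i, part in enumerate(pattern_parts):
        if part == "#":
            return True  # matches all remaining levels
        if i >= len(topic_parts):
            return False
        if part != "+" and part != topic_parts[i]:
            return False
    return len(pattern_parts) == len(topic_parts)


topic = build_topic("kitchen", "light", "l1", "set")
print(topic, parse_topic(topic)["room"])
print(topic_matches("home/+/light/+/set", topic))  # all lights, any room
print(topic_matches("home/kitchen/#", topic))      # everything in the kitchen
```

Putting the room and device type at fixed levels lets the server address one device, one room, or one device class with a single subscription.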

Distributed Systems

(Jan’22)

This project mimics a simple distributed data store that uses a consistent hashing scheme to map key-value data to specific database nodes. It uses virtual nodes to implement consistent hashing in a masterless data fetch setup. The project involves computing a per-node virtual node mapping, inserting and fetching key-value data, and adding and removing nodes.

Skills & Tools Covered:

Masterless distributed database, data insertion, data fetch, dynamic batch updates of Python data structures, mainly dictionaries. Python language features, such as the random module utilities and math module utilities.
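Consistent hashing with virtual nodes can be sketched as a sorted ring of positions. This is a minimal illustration and not the project's code: it places virtual nodes by hashing a label with SHA-256, whereas the original may have placed them differently (for example with the random module), and the class name and default of 8 virtual nodes per node are assumptions.

```python
import bisect
import hashlib


class ConsistentHashRing:
    def __init__(self, vnodes_per_node=8):
        self.vnodes_per_node = vnodes_per_node
        self._ring = []  # sorted list of (position, node_name)

    @staticmethod
    def _position(label):
        """Map any string to a position on the ring."""
        return int(hashlib.sha256(label.encode()).hexdigest(), 16)

    def add_node(self, node):
        """Insert one ring entry per virtual node of this physical node."""
        for i in range(self.vnodes_per_node):
            bisect.insort(self._ring, (self._position(f"{node}#vn{i}"), node))

    def remove_node(self, node):
        self._ring = [(pos, name) for pos, name in self._ring if name != node]

    def get_node(self, key):
        """Return the owner of the first virtual node clockwise from the key."""
        if not self._ring:
            raise LookupError("ring has no nodes")
        positions = [pos for pos, _ in self._ring]
        idx = bisect.bisect_right(positions, self._position(key)) % len(self._ring)
        return self._ring[idx][1]


ring = ConsistentHashRing()
for name in ("node-a", "node-b", "node-c"):
    ring.add_node(name)

before = {f"key-{i}": ring.get_node(f"key-{i}") for i in range(200)}
ring.remove_node("node-c")
after = {key: ring.get_node(key) for key in before}
moved = [key for key in before if before[key] != after[key]]
print(f"{len(moved)} of {len(before)} keys moved after removing node-c")
```

Only keys that were owned by the removed node are reassigned; every other key keeps its owner, which is the property that makes adding and removing nodes cheap.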

Software & Database Design

(Dec’21)

The weather data system supports storage of temperature and humidity values from various devices, in a MongoDB database. It also has a device registry, and supports categories of users. The aim is to read device and user configuration information from config files, and populate the database with access control information, along with the actual data. Also aggregate data periodically, and display reports based on query criteria.

Skills & Tools Covered:

Organize information from formatted text (CSV) files, populate MongoDB collections with such structured information, query MongoDB collections based on user authorization, Model layer over a DB collection, aggregate data periodically, generate daily reports (with display) based on date ranges, range queries, working with Date data type.

↑ (Advanced Certification in Software Engineering for Cloud, Blockchain & IoT.)

Certifications & Learning Journeys

- IBM RAG and Agentic AI (IBM Skills Network – In Progress)

- Generative AI for Business with MSFT Azure OpenAI Program (Microsoft Azure & GL)

- Machine Learning with Python (IBM Skills Network Certificate via Coursera)

- Deep Learning Essentials with Keras (IBM Skills Network Certificate via Coursera)

- Advanced Deep Learning Specialist (IBM Skills Network Certificate via Coursera)

- Advanced Certification in Software Engineering for Cloud, Blockchain & IoT. (IIT Madras & GL)

- Microsoft Certified: Azure AI Fundamentals

- Microsoft Applied Skills: Create an intelligent document processing solution with Azure AI Document Intelligence

- Microsoft Certified Professional (MCP)

- My Microsoft Learn Profile

- Master of Data Science (Global) by Deakin University (In Progress)

- Post Graduate Program in Artificial Intelligence & Machine Learning (McCombs School of Business & GL, 2025)

- Master of Computer Application

- B.Sc. (Physics, Statistics, Computer Science)

Thank you for taking the time to explore this portfolio!

It reflects a selection of recent technical and design explorations focused on system adaptability, AI/ML integration, and human-centered problem solving. If a project aligns with your research interests, hiring goals, or collaborative focus areas, I’d be glad to connect. Feedback is always welcome; it helps refine not just the work, but the thinking behind it.